Autonomous Quadrotor Flight Using Onboard RGB-D Visual Odometry

May 31, 2014

Roberto G. Valenti

Ivan Dryanovski

Carlos Jaramillo

Daniel Perea Strom

Jizhong Xiao

AscTec Pelican UAV with an ASUS Xtion Sensor

Abstract

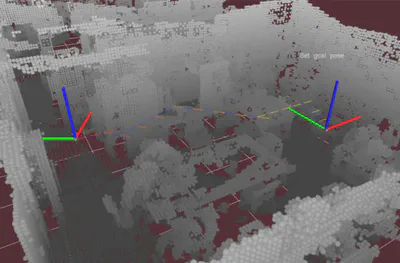

In this paper we present a navigation system for Micro Aerial Vehicles (MAVs) based on information provided by a visual odometry algorithm that processes data from an RGB-D camera. The visual odometry algorithm uses an uncertainty analysis of the depth information to align newly observed features against a global sparse model of previously detected 3D features. The visual odometry provides updates at roughly 30 Hz, which are fused at 1 kHz with the inertial sensor data through a Kalman filter. The resulting high-rate pose estimate is used as feedback for the controller, enabling autonomous flight. We developed a 4-DOF path planner and implemented real-time 3D SLAM, with the entire system running onboard. The experimental results and a live video demonstrate the autonomous flight and 3D SLAM capabilities of the quadrotor with our system.
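
The abstract does not specify the filter's state vector or its process and measurement models. As a rough illustration of how ~30 Hz visual odometry can be fused with 1 kHz inertial data, the sketch below runs a linear Kalman filter on a single axis: IMU acceleration drives a prediction step every 1 ms, and a VO position fix corrects the estimate every 33 steps (about 30 Hz). The per-axis decomposition, the noise values, the noiseless synthetic data, and the names `AxisKF` and `simulate` are assumptions for illustration only, not the authors' filter.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class AxisKF:
    """Linear Kalman filter for one axis with state [position, velocity].

    Hypothetical sketch: IMU acceleration is the control input of the
    prediction step; visual odometry (VO) supplies position measurements.
    """
    accel_std: float = 0.5   # assumed IMU acceleration noise [m/s^2]
    vo_std: float = 0.01     # assumed VO position noise [m]
    p: float = 0.0           # position estimate [m]
    v: float = 0.0           # velocity estimate [m/s]
    P00: float = 1.0         # covariance entries of [p, v]
    P01: float = 0.0
    P10: float = 0.0
    P11: float = 1.0

    def predict(self, accel: float, dt: float) -> None:
        """Propagate with x <- F x + B a and P <- F P F^T + Q (runs at IMU rate)."""
        self.p += self.v * dt + 0.5 * accel * dt * dt
        self.v += accel * dt
        b0, b1 = 0.5 * dt * dt, dt           # B = [dt^2/2, dt]
        q = self.accel_std ** 2              # Q = q * B B^T
        P00 = self.P00 + dt * (self.P01 + self.P10) + dt * dt * self.P11 + q * b0 * b0
        P01 = self.P01 + dt * self.P11 + q * b0 * b1
        P10 = self.P10 + dt * self.P11 + q * b1 * b0
        P11 = self.P11 + q * b1 * b1
        self.P00, self.P01, self.P10, self.P11 = P00, P01, P10, P11

    def update(self, z: float) -> None:
        """Correct with a VO position fix z (H = [1, 0], R = vo_std^2)."""
        s = self.P00 + self.vo_std ** 2      # innovation variance
        k0, k1 = self.P00 / s, self.P10 / s  # Kalman gain
        y = z - self.p                       # innovation
        self.p += k0 * y
        self.v += k1 * y
        # P <- (I - K H) P
        P00 = (1.0 - k0) * self.P00
        P01 = (1.0 - k0) * self.P01
        P10 = self.P10 - k1 * self.P00
        P11 = self.P11 - k1 * self.P01
        self.P00, self.P01, self.P10, self.P11 = P00, P01, P10, P11


def simulate(duration: float = 2.0, imu_rate: int = 1000,
             vo_every: int = 33) -> Tuple[AxisKF, float, float]:
    """Noiseless 1-D run: 1 kHz IMU prediction, VO update every 33 steps (~30 Hz)."""
    dt = 1.0 / imu_rate
    kf = AxisKF()                            # estimate starts at p = 0, v = 0
    true_p, true_v, accel = 0.5, 0.0, 1.0    # true start is 0.5 m away
    for k in range(1, int(duration * imu_rate) + 1):
        true_p += true_v * dt + 0.5 * accel * dt * dt
        true_v += accel * dt
        kf.predict(accel, dt)                # high-rate prediction
        if k % vo_every == 0:
            kf.update(true_p)                # low-rate VO correction
    return kf, true_p, true_v


kf, true_p, true_v = simulate()
print(f"position error {abs(kf.p - true_p):.2e} m, "
      f"velocity error {abs(kf.v - true_v):.2e} m/s")
```

Because prediction runs at the IMU rate, a fresh state estimate is available to the controller every millisecond, while the slower VO corrections bound the drift that integrating acceleration alone would accumulate.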

Type

Publication

In International Conference on Robotics and Automation (ICRA), IEEE.

Four-dimensional path (blue) in a map of a cluttered indoor environment, built online by the visual odometry algorithm from RGB-D sensor data.
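
For a quadrotor, the four degrees of freedom of such a path are commonly taken to be position (x, y, z) plus yaw; this page does not state the parameterization, so that reading is an assumption here. The sketch below is not the authors' 4-DOF planner, which is not described on this page. It only shows one way to represent a path in this space and densify it between waypoints. The names `Waypoint`, `wrap_angle`, `lerp_waypoint`, and `densify` are hypothetical.

```python
import math
from typing import List, Tuple

# Assumed 4-DOF parameterization: position (x, y, z) in metres plus yaw in radians.
Waypoint = Tuple[float, float, float, float]


def wrap_angle(a: float) -> float:
    """Wrap an angle to the interval [-pi, pi)."""
    return (a + math.pi) % (2.0 * math.pi) - math.pi


def lerp_waypoint(a: Waypoint, b: Waypoint, t: float) -> Waypoint:
    """Interpolate position linearly and yaw along the shorter turn."""
    x, y, z = (a[i] + t * (b[i] - a[i]) for i in range(3))
    dyaw = wrap_angle(b[3] - a[3])  # e.g. 170 deg -> -170 deg is a +20 deg turn
    return (x, y, z, wrap_angle(a[3] + t * dyaw))


def densify(path: List[Waypoint], steps: int) -> List[Waypoint]:
    """Insert `steps - 1` intermediate poses between consecutive waypoints."""
    out = [path[0]]
    for a, b in zip(path, path[1:]):
        for i in range(1, steps + 1):
            out.append(lerp_waypoint(a, b, i / steps))
    return out


path = [(0.0, 0.0, 1.0, math.radians(170)), (2.0, 0.0, 1.0, math.radians(-170))]
for pose in densify(path, 2):
    print(pose)
```

Routing yaw through `wrap_angle` matters: a naive linear blend from 170° to -170° would sweep 340° through zero instead of turning 20° through ±180°.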