GUMS: A Generalized Unified Model for Stereo Omnidirectional Vision (Demonstrated Via a Folded Catadioptric System)

Oct 9, 2016

Carlos Jaramillo

Roberto G. Valenti

Jizhong Xiao

Abstract

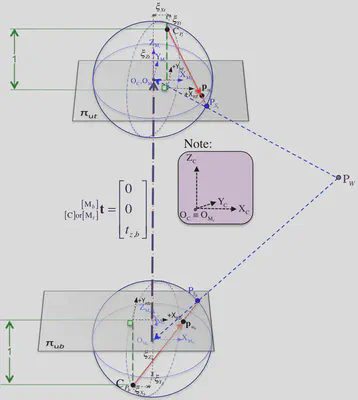

This paper introduces GUMS, a complete projection model for omnidirectional stereo vision systems. GUMS is based on the existing generalized unified model (GUM), which we extend in order to enforce the tight geometric relationship between the two omnidirectional views of a fixed-baseline sensor. We demonstrate the proposed model's calibration on a single-camera coaxial omnistereo system, solving for its parameters jointly via bundle adjustment. We compare this coupled method against the naive approach, in which the intrinsic parameters of each omnidirectional view are first calibrated individually using existing monocular implementations, and the extrinsic parameters are then solved for in a separate step that leaves the previously computed intrinsic solutions unchanged. We validate GUMS and the effectiveness of its calibration against ground-truth data using both real and synthetic systems. Our calibration method successfully corrects the unavoidable misalignment present in vertically configured catadioptric rigs. We also generate 3D point clouds with the calibrated GUMS systems to demonstrate the qualitative outcome of our contribution.
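The abstract does not spell out the GUM or GUMS equations. For orientation only, here is a minimal sketch of the classic single-view unified sphere model (Geyer and Daniilidis; Mei and Rives), which we assume GUM generalizes. It assumes a single mirror parameter `xi`, pinhole intrinsics without skew, and no lens distortion. It is not the GUMS formulation, and `unified_project` is a hypothetical helper written for illustration.

```python
import math


def unified_project(point, xi, fx, fy, cx, cy):
    """Project a 3D point with the classic unified sphere model (illustrative sketch).

    Assumptions: one mirror parameter xi, no skew, no distortion.
    This is NOT the GUMS model from the paper.
    """
    X, Y, Z = point
    norm = math.sqrt(X * X + Y * Y + Z * Z)
    if norm == 0.0:
        raise ValueError("point must not coincide with the projection center")
    # 1) Normalize the point onto the unit sphere centered at the mirror focus.
    xs, ys, zs = X / norm, Y / norm, Z / norm
    # 2) Move the projection center by xi along the z axis and project
    #    perspectively onto the normalized image plane.
    denom = zs + xi
    if denom <= 0.0:
        raise ValueError("point is not projectable under this model")
    mx, my = xs / denom, ys / denom
    # 3) Map to pixels with (generalized) focal lengths and principal point.
    return fx * mx + cx, fy * my + cy


if __name__ == "__main__":
    # A point on the optical axis lands on the principal point.
    print(unified_project((0.0, 0.0, 1.0), 1.0, 300.0, 300.0, 320.0, 240.0))
```

With `xi = 0` the sketch reduces to an ordinary pinhole projection, while `xi = 1` corresponds to the parabolic-mirror case of the unified model. A stereo model such as GUMS would additionally have to couple two such views through their fixed relative pose, which this single-view sketch does not attempt.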

Type

Publication

In International Conference on Intelligent Robots and Systems (IROS), IEEE.